To continue the Windows Azure Pack series, here is my next topic: Deployment Scenarios on Nutanix.

If you missed Part 1, see the link below:

Part 1 – Understanding Windows Azure Pack

Windows Azure Pack – Deployment Scenarios

Terminology

OK, let’s start with some terminology used when talking about WAP (Windows Azure Pack). Here are two key terms you need to know:

- Administrator – Someone who deploys, configures and manages WAP and makes cloud services available to tenants.

- Tenant – Someone who subscribes to and uses cloud services made available through WAP.

When WAP is deployed by a hoster (service provider), the administrator is the hoster’s IT staff and the tenants are the customers to whom the hoster sells cloud services. When WAP is deployed in an enterprise datacenter, the administrator is your own IT department, and the tenants are the other departments, divisions, or business units within your organization that want to take advantage of the cloud services your IT department offers.

Admin Portal

User Sign-in Portal

User Main Portal

Required components

WAP includes seven required components. Two of these components are portals:

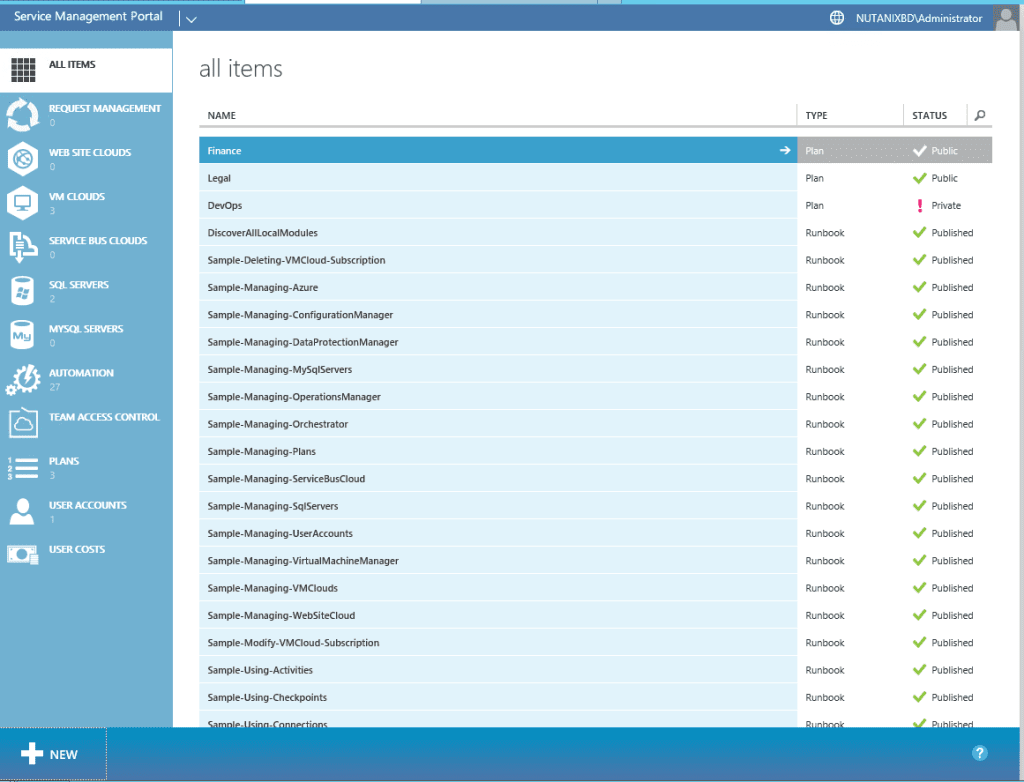

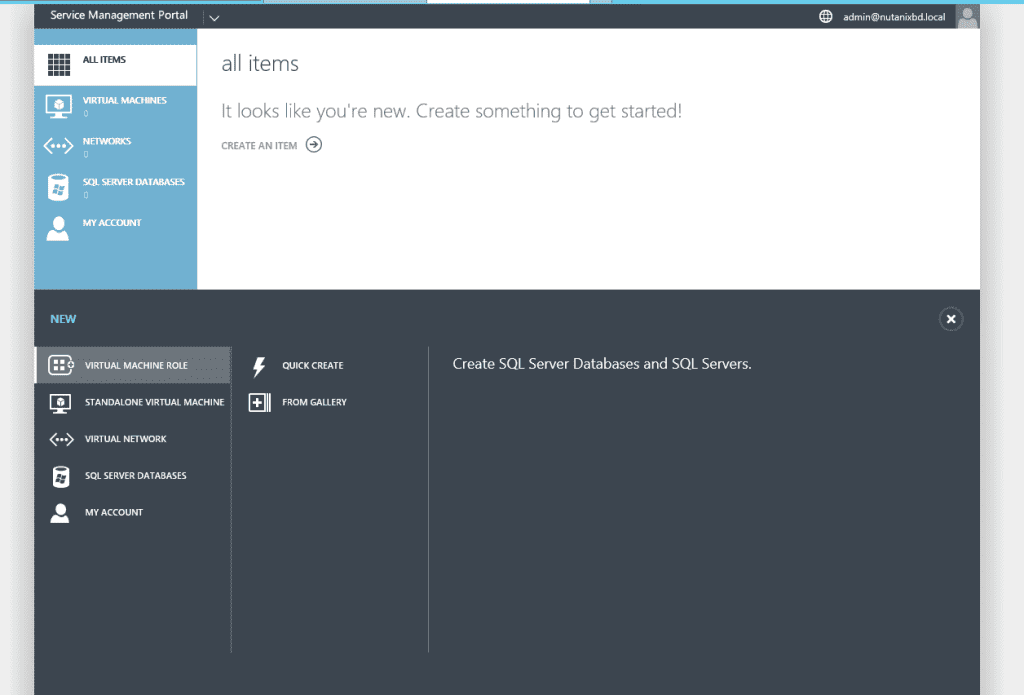

- Management Portal for Administrators – A web-based portal that lets administrators configure and manage user accounts, resource clouds, tenant offers, and so on.

- Management Portal for Tenants – A web-based self-service portal that lets tenants provision, monitor and manage the following cloud services: Web Sites, Virtual Machines, and Service Bus.

The self-service capabilities of the Management Portal for Tenants enable tenants to deploy and manage the cloud services they need, when they need them, without having to go through the slow procurement processes of the traditional approach to enterprise IT.

Authentication is another key feature of WAP, ensuring that only properly authenticated administrators have access to the Management Portal for Administrators and only properly authenticated users have access to the Management Portal for Tenants. By default, the Management Portal for Administrators uses Windows authentication (Kerberos or NTLM), but you can optionally use Active Directory Federation Services (ADFS) instead. The Management Portal for Tenants, on the other hand, uses the ASP.NET Membership Provider for authentication. WAP includes two authentication sites for these purposes: an Admin Authentication Site and a Tenant Authentication Site.

WAP also includes components that provide the following application programming interfaces (APIs):

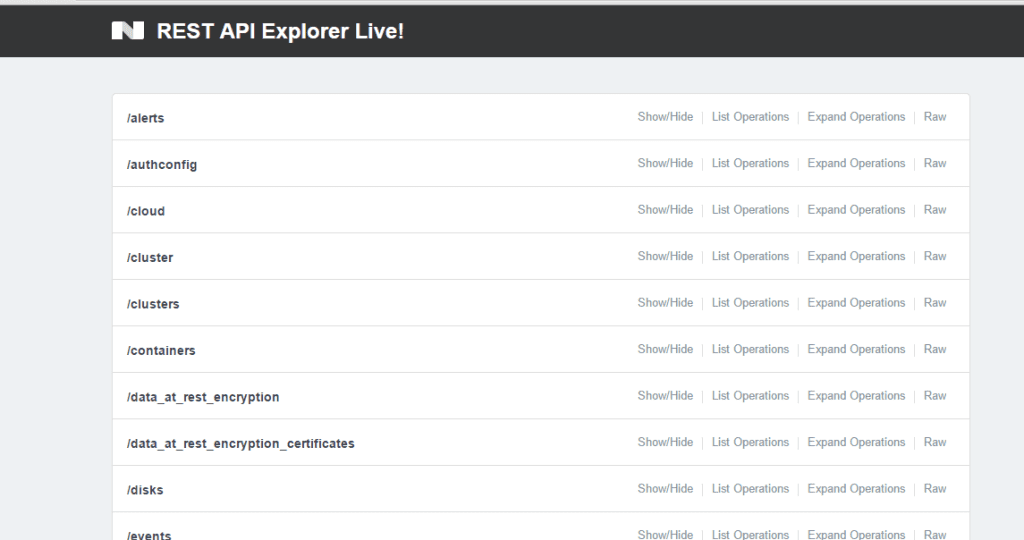

- Windows Azure Pack Admin API – Enables administration tasks to be performed using the Management Portal for Administrators and Windows PowerShell.

- Windows Azure Pack Tenant API – Enables tenant-specific tasks to be performed using the Management Portal for Tenants and Windows PowerShell.

- Windows Azure Pack Tenant Public API – Provides additional tenant-specific functionality primarily for hosting provider environments.

All of the above components are required in any WAP deployment.
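To keep the required components straight, here is a minimal Python sketch that models them as a simple inventory, along with the authentication site (and default authentication mechanism) that sits behind each portal. The names `Role`, `REQUIRED_COMPONENTS`, `PORTAL_AUTH`, and `count_by_role` are hypothetical and only for illustration; this is not a WAP API or configuration format.

```python
from __future__ import annotations

from enum import Enum


class Role(Enum):
    PORTAL = "portal"
    AUTH_SITE = "authentication site"
    API = "API"


# Hypothetical inventory of the required WAP components described above.
# Illustration only: this is not a WAP API or configuration format.
REQUIRED_COMPONENTS: dict[str, Role] = {
    "Management Portal for Administrators": Role.PORTAL,
    "Management Portal for Tenants": Role.PORTAL,
    "Admin Authentication Site": Role.AUTH_SITE,
    "Tenant Authentication Site": Role.AUTH_SITE,
    "Windows Azure Pack Admin API": Role.API,
    "Windows Azure Pack Tenant API": Role.API,
    "Windows Azure Pack Tenant Public API": Role.API,
}

# Each portal mapped to its authentication site and default mechanism.
PORTAL_AUTH = {
    "Management Portal for Administrators": (
        "Admin Authentication Site",
        "Windows authentication (Kerberos or NTLM); ADFS optional",
    ),
    "Management Portal for Tenants": (
        "Tenant Authentication Site",
        "ASP.NET Membership Provider",
    ),
}


def count_by_role() -> dict[Role, int]:
    """Count how many required components fall into each role."""
    counts = {role: 0 for role in Role}
    for role in REQUIRED_COMPONENTS.values():
        counts[role] += 1
    return counts


if __name__ == "__main__":
    for role, n in count_by_role().items():
        print(f"{role.value}: {n}")
    print("total required:", len(REQUIRED_COMPONENTS))
```

Running it prints two portals, two authentication sites, and three APIs, for seven required components in total.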

Optional components

The following components of WAP may be deployed in order to offer additional forms of cloud services and other resources to tenants:

- Web Sites – Provides you with a managed web environment you can use to create new websites or migrate your existing business website into the cloud.

- Virtual Machines – Provides you with a general-purpose computing environment that lets you create, deploy, and manage virtual machines running in your own datacenter, much as you would in the Windows Azure public cloud.

- Service Bus – Allows you to keep your apps connected across your private cloud environment and the Windows Azure public cloud.

- Automation and Extensibility – Allows you to automate and integrate custom services into your services framework using runbooks.

- SQL and MySQL – Allows you to provision Microsoft SQL Server and MySQL databases for tenants to use.

Windows Azure Pack – Deployment Scenarios

There are two basic deployment scenarios for WAP:

- Express architecture – Recommended for proof of concept testing only.

- Distributed architecture – Recommended for production environments.

In addition, the distributed architecture can be implemented in various ways depending on the scale and degree of availability needed. Let’s briefly examine each of these scenarios.

Express architecture

In an express deployment of Windows Azure Pack, you install all of the required components on a single server and any optional components needed on one or more additional servers. This is the deployment I will be doing in the next part of the series. Specifically, the following required components must all be installed on your first server:

- Windows Azure Pack Admin API

- Windows Azure Pack Tenant API

- Windows Azure Pack Tenant Public API

- Admin Authentication Site

- Tenant Authentication Site

- Management Portal for Administrators

- Management Portal for Tenants

In addition, your first server must host the SQL Management Database used by the required components. This means you must install a supported version of Microsoft SQL Server on the first server.
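The express rule above boils down to a set check: every required component, plus the SQL Management Database, must land on the first server. The sketch below assumes a hypothetical layout format (a dict mapping a server name to the set of components it hosts); the server names `wap01` and `wap02` and the function `missing_on_first_server` are made up for illustration, and nothing here talks to a real WAP installer.

```python
from __future__ import annotations

# Hypothetical inventory; names are illustrative, not part of any WAP API.
REQUIRED_COMPONENTS = (
    "Windows Azure Pack Admin API",
    "Windows Azure Pack Tenant API",
    "Windows Azure Pack Tenant Public API",
    "Admin Authentication Site",
    "Tenant Authentication Site",
    "Management Portal for Administrators",
    "Management Portal for Tenants",
)
SQL_MGMT_DB = "SQL Management Database"


def missing_on_first_server(layout: dict[str, set[str]], first_server: str) -> set[str]:
    """Return the required pieces that the layout has NOT placed on first_server."""
    needed = set(REQUIRED_COMPONENTS) | {SQL_MGMT_DB}
    return needed - layout.get(first_server, set())


# Example express layout: everything required on wap01,
# one optional service on an additional server (wap02).
layout = {
    "wap01": set(REQUIRED_COMPONENTS) | {SQL_MGMT_DB},
    "wap02": {"Virtual Machines"},
}
print(missing_on_first_server(layout, "wap01"))  # set()
```

An empty set means the first server satisfies the express layout; optional components on other servers do not affect the check.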

Distributed architecture

In a distributed deployment of WAP, you spread out the required components across multiple servers. There are many ways you can do this, but you should generally follow these recommendations to ensure optimal performance and supportability for your deployment:

- Install a management portal and its corresponding authentication site on the same server. For example, install the Management Portal for Administrators and the Admin Authentication Site on the same server.

- If you will be providing cloud services to tenants over the public Internet, install the following components on the same public-facing server:

  - Management Portal for Tenants

  - Tenant Authentication Site

  - Windows Azure Pack Tenant Public API

- If Active Directory Domain Services (ADDS) is to be used for authentication purposes, install it on a separate identity server.

- If Active Directory Federation Services (ADFS) is to be used for authentication purposes, install it on a separate identity server along with an ADFS

- For greater scalability and high availability in large deployments, install the SQL Management Database on a separate failover cluster. In addition, use failover clustering for your public-facing servers and for the servers hosting your other required components.

- For even higher scalability, install each required component on a separate failover cluster and the SQL Management Database on another failover cluster. In other words, use eight failover clusters to deploy the seven required components plus the SQL Management Database. Check out the Nutanix best practices guide for deploying SQL Server.
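The first two recommendations above are co-location rules, so a proposed distributed layout can be checked mechanically. This is a minimal sketch under stated assumptions: the layout is a hypothetical dict mapping a server (or failover cluster) name to the components it hosts, the server names `admin01`, `public01`, and `sqlcluster01` are made up, and the check encodes only the portal/authentication-site pairing, the public-facing grouping, and the requirement that everything is placed somewhere. It does not model identity servers or clustering.

```python
from __future__ import annotations

from itertools import chain

# Hypothetical inventory; names are illustrative, not part of any WAP API.
REQUIRED_COMPONENTS = (
    "Windows Azure Pack Admin API",
    "Windows Azure Pack Tenant API",
    "Windows Azure Pack Tenant Public API",
    "Admin Authentication Site",
    "Tenant Authentication Site",
    "Management Portal for Administrators",
    "Management Portal for Tenants",
)
SQL_MGMT_DB = "SQL Management Database"

# Each management portal should share a server with its authentication site.
PORTAL_AUTH_PAIRS = (
    ("Management Portal for Administrators", "Admin Authentication Site"),
    ("Management Portal for Tenants", "Tenant Authentication Site"),
)
# These should share one public-facing server when tenants connect over the Internet.
PUBLIC_FACING = (
    "Management Portal for Tenants",
    "Tenant Authentication Site",
    "Windows Azure Pack Tenant Public API",
)


def host_of(layout: dict[str, set[str]], component: str) -> str | None:
    """Return the first server that hosts component, or None if it is unplaced."""
    for server, components in layout.items():
        if component in components:
            return server
    return None


def check_distributed(layout: dict[str, set[str]], public_internet: bool) -> list[str]:
    """List the ways a proposed layout departs from the co-location recommendations."""
    problems = []
    # Every required component and the SQL Management Database must be placed.
    for item in chain(REQUIRED_COMPONENTS, [SQL_MGMT_DB]):
        if host_of(layout, item) is None:
            problems.append(f"{item} is not placed on any server")
    # Portals and their authentication sites belong together.
    for portal, auth in PORTAL_AUTH_PAIRS:
        if host_of(layout, portal) != host_of(layout, auth):
            problems.append(f"{portal} and {auth} are on different servers")
    # Internet-facing tenant components belong on a single server.
    if public_internet and len({host_of(layout, c) for c in PUBLIC_FACING}) != 1:
        problems.append("public-facing tenant components are split across servers")
    return problems


layout = {
    "admin01": {
        "Management Portal for Administrators",
        "Admin Authentication Site",
        "Windows Azure Pack Admin API",
        "Windows Azure Pack Tenant API",
    },
    "public01": {
        "Management Portal for Tenants",
        "Tenant Authentication Site",
        "Windows Azure Pack Tenant Public API",
    },
    "sqlcluster01": {SQL_MGMT_DB},
}
print(check_distributed(layout, public_internet=True))  # []
```

Moving the Tenant Authentication Site onto `admin01` in this layout would produce two problems: the tenant portal pair is split, and the public-facing group is no longer on one server.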

In the next blog post in this series, we will begin our walk-through of installing and configuring WAP. I will focus primarily on the express deployment scenario, along with two types of cloud services: Virtual Machines and SQL Databases. Let’s build a cloud!

Until next time, Rob…

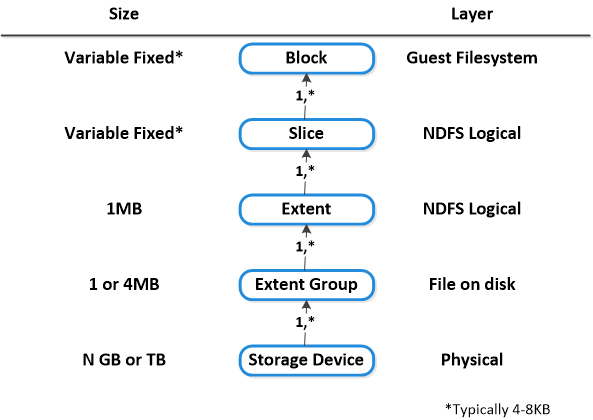

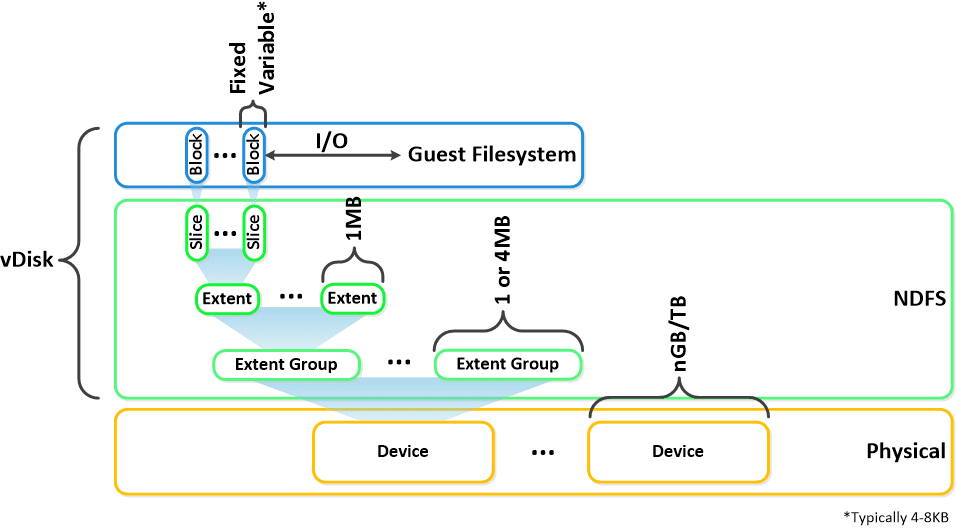

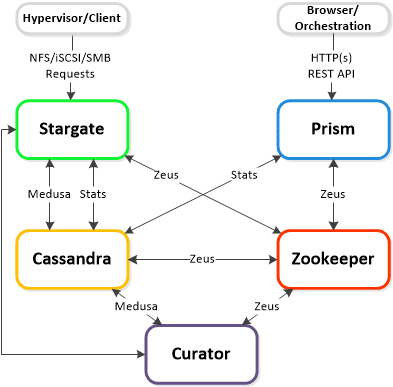


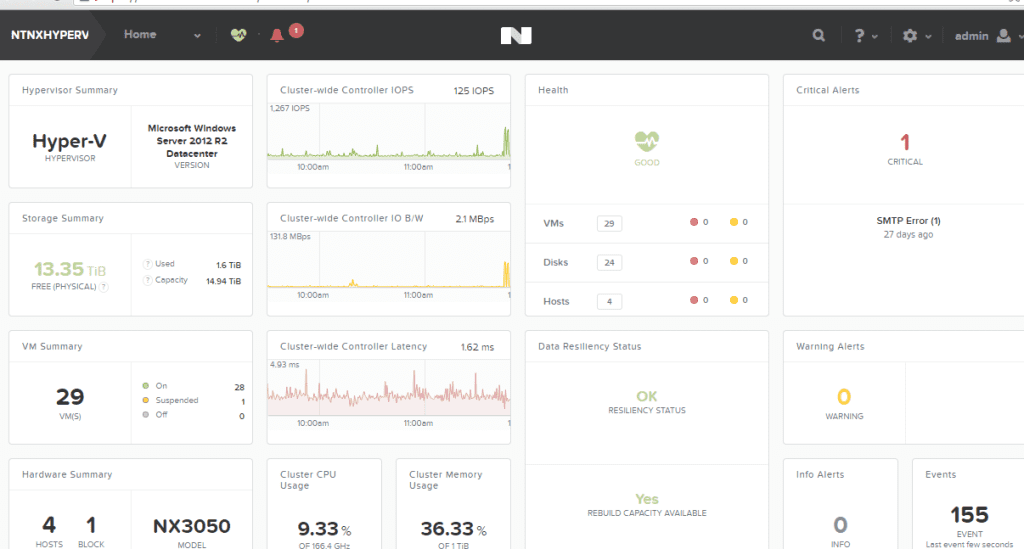

