Before we go into what “Ready” really means, remember that every great journey has a story behind it. This will be a multi-part series, starting with how I joined Nutanix and grew into building a world-class program called “Nutanix Ready”. Stay tuned, Part 1 is coming very soon! Rob

Storage Spaces Direct Explained – Applications & Performance

Applications

The Microsoft SQL Server product group has announced that SQL Server, whether virtualized or on bare metal, is fully supported on Storage Spaces Direct (S2D). The Exchange team has not clearly endorsed running Exchange on S2D. It still prefers that Exchange be deployed on physical servers with local JBODs using Exchange Database Availability Groups, or that customers simply move to O365.

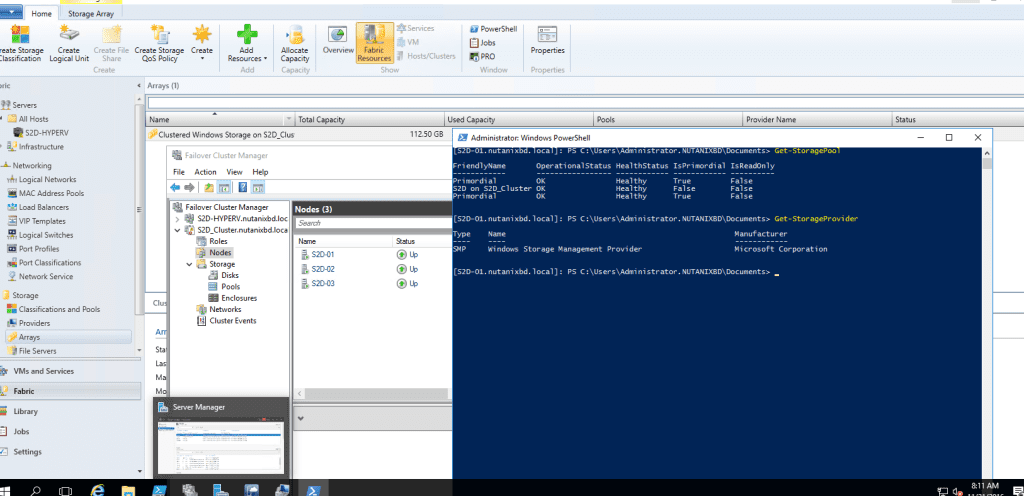

Storage Spaces Direct Explained – Management & Operations

Good day, everyone. It's been a few weeks; I've been busy with work and such. Anyway, this post covers how management and operations are done in S2D. My biggest pet peeve is complex GUI management, and yet again, Microsoft doesn't disappoint. It still takes a number of steps across different interfaces to bring up S2D. Check out Aidan Finn's blog post from last year on disaggregated management; it still rings true with the release of Windows Server 2016. It shouldn't be this complex, IMO 🙁 That being said, let's move on to the details.

CPS Standard on Nutanix Released

Microsoft Exchange Best Practices on Nutanix

To continue from my last blog post on Exchange...

As I mentioned previously, I support SEs from all over the world. Again today, I was asked what the best practices are for running Exchange on Nutanix. Funnily enough, this question comes up quite often, so I am going to help resolve that. There's a lot of great information out there, especially from my friend Josh Odgers, who has been leading the charge on this for a long time. Some of his posts can be controversial, but the truth is always there: he's getting a point across.

Microsoft Exchange Documentor 1.0 – Planning Tool

Nutanix App for Splunk – Just Released

Nutanix App for Splunk

A video walkthrough of the installation, configuration, and a demo of the Nutanix App for Splunk. Also included is a demo of Splunk Mobile running the Nutanix App versus Safari running Prism. To learn more about Splunk and the details of this app, check out Andre Leibovici's (@andreleibovici) blog post. Happy Splunking 🙂

Until next time, Rob…

Nutanix NOS 4.6 Released…

On February 16, 2016, Nutanix announced the Acropolis NOS 4.6 release, and it became available for download last week. Along with many enhancements, I want to highlight several items, including some tech preview features.