Please note: only the graphics are generated by OpenAI's DALL·E 3 model; the rest is me 😉

The age of artificial intelligence (AI) is upon us, with advancements arriving at a blistering pace. Microsoft’s Azure AI is at the forefront of this revolution, providing a comprehensive suite of tools and services that enable developers, data scientists, and AI enthusiasts to create intelligent applications and solutions. In this blog post, we will delve deep into Azure AI and explore Azure AI Studio, a powerful platform that simplifies the creation and deployment of AI models.

What is Azure AI?

Azure AI is a collection of cognitive services, machine learning tools, and AI apps designed to help users build, train, and deploy AI models quickly and efficiently. It is part of Microsoft Azure, the company’s cloud computing service, which offers a wide range of services, including computing, analytics, storage, and networking.

Azure AI is built on the principles of democratizing AI technology, making it accessible to people with various levels of expertise. Whether you’re a seasoned data scientist or a developer looking to integrate AI into your applications, Azure AI has something for you.

Key Components of Azure AI

Azure AI consists of several key components that cater to different AI development needs:

- Azure Machine Learning (Azure ML): A cloud-based platform for building, training, and deploying machine learning models. It supports various machine learning algorithms and includes pre-built models for common tasks.

- Azure Cognitive Services: These are pre-built APIs for adding AI capabilities like vision, speech, language, and decision-making to your applications without requiring deep data science knowledge.

- Azure Bot Service: It provides tools to build, test, deploy, and manage intelligent bots that can interact naturally with users through various channels.
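To make the "pre-built APIs" idea concrete, here is a minimal sketch of what calling one of these services over REST can look like, using sentiment analysis as the example. It only builds the HTTP request and does not send it. The resource name and key are hypothetical placeholders, and the `/text/analytics/v3.1/sentiment` path is one API version I am assuming here; check the current service documentation for the version your resource supports.

```python
import json
import urllib.request


def build_sentiment_request(endpoint: str, key: str, texts: list[str]) -> urllib.request.Request:
    """Build (but do not send) a sentiment-analysis request for a language resource."""
    # Each document needs a unique id; the language is assumed to be English here.
    documents = [
        {"id": str(i), "language": "en", "text": text}
        for i, text in enumerate(texts, start=1)
    ]
    body = json.dumps({"documents": documents}).encode("utf-8")
    return urllib.request.Request(
        url=endpoint.rstrip("/") + "/text/analytics/v3.1/sentiment",  # assumed API version
        data=body,
        headers={
            "Ocp-Apim-Subscription-Key": key,  # resource key from the Azure portal
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Hypothetical endpoint and key, for illustration only.
request = build_sentiment_request(
    "https://example-resource.cognitiveservices.azure.com",
    "example-key",
    ["I love this product."],
)
# To actually call the service you would pass the request to urllib.request.urlopen(request).
```

The point is that no model training is involved: you send text and a key, and the service returns a JSON result.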

Introducing Azure AI Studio (In Preview)

Azure AI Studio, which builds on Azure Machine Learning and is closely related to (though not the same portal as) Azure Machine Learning studio, is an integrated, end-to-end data science and advanced analytics solution. It combines a visual interface, where you can drag and drop machine learning modules to build your AI models, with a powerful backend that supports model training and deployment.

Features of Azure AI Studio

- Visual Interface: A user-friendly, drag-and-drop environment to build and refine machine learning workflows.

- Pre-Built Algorithms and Modules: A library of pre-built algorithms and data transformation modules that accelerate development.

- Scalability: The ability to scale your experiments using the power of Azure cloud resources.

- Collaboration: Team members can collaborate on projects and securely share datasets, experiments, and models within the Azure cloud infrastructure.

- Pipeline Creation: The ability to create and manage machine learning pipelines that streamline the data processing, model training, and deployment processes.

- MLOps Integration: Supports MLOps (DevOps for machine learning) practices with version control, model management, and monitoring tools to maintain the lifecycle of machine learning models.

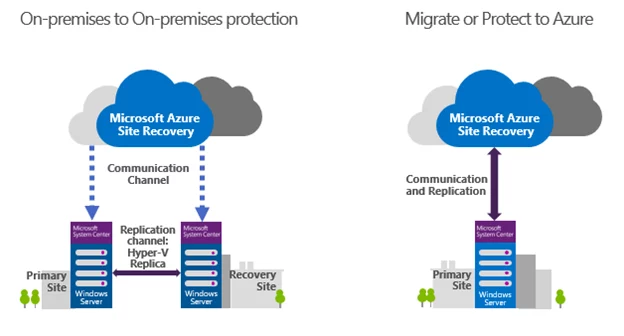

- Hybrid Environment: Flexibility to build and deploy models in the cloud or on the edge, on-premises, and in hybrid environments.
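If the pipeline and MLOps features sound abstract, the underlying idea is simple: a pipeline is an ordered list of named steps, each handing its output to the next. The sketch below is plain Python and is not the Azure API; every step name and the stand-in "model" are hypothetical and only show the shape of the idea.

```python
from typing import Callable

Step = Callable[[dict], dict]


def run_pipeline(steps: list[tuple[str, Step]], context: dict) -> dict:
    """Run each named step in order, passing the context dict along."""
    for name, step in steps:
        context = step(context)
        # Record which steps have finished, in order.
        context.setdefault("completed", []).append(name)
    return context


def prepare(ctx: dict) -> dict:
    # Data processing: drop rows with missing values.
    return {**ctx, "rows": [r for r in ctx["rows"] if r is not None]}


def train(ctx: dict) -> dict:
    # Stand-in "model": just the mean of the cleaned rows.
    return {**ctx, "model": sum(ctx["rows"]) / len(ctx["rows"])}


def register(ctx: dict) -> dict:
    # Stand-in for versioned model registration.
    return {**ctx, "model_version": ctx.get("model_version", 0) + 1}


result = run_pipeline(
    [("prepare", prepare), ("train", train), ("register", register)],
    {"rows": [1.0, None, 3.0]},
)
```

A managed pipeline service adds the parts this toy leaves out, such as running steps on cloud compute, caching step outputs, and tracking model versions over time.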

Getting Started with Azure AI Studio

To begin using Azure AI Studio, you usually follow these general steps:

- Set up an Azure subscription: If you don’t already have one, create a Microsoft Azure account and set up a subscription.

- Create a Machine Learning resource: Navigate to the Azure portal and create a new Azure Machine Learning resource in your subscription.

- Launch AI Studio: Launch Azure AI Studio from the Azure portal once your resource is ready.

- Import Data: Bring your datasets from Azure storage services or your local machine.

- Build and Train Models: Use the visual interface to drag and drop datasets and modules to create machine-learning models. Split your data, select algorithms, and train your models.

- Evaluate and Deploy: Evaluate your trained models against test data and deploy them as a web service for real-time predictions or batch processing once satisfied with the performance.
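For readers who want to see what the "split, train, evaluate" steps actually do behind the drag-and-drop modules, here is a small local sketch in plain Python. It is not Azure code: the toy dataset is made up, and the nearest-centroid classifier is simply the easiest algorithm to show in a few lines, not something the studio prescribes.

```python
import random


def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle the rows and split them into (train, test) lists."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


def train_centroids(train_rows):
    """'Train' a nearest-centroid model: the mean feature vector per label."""
    sums, counts = {}, {}
    for features, label in train_rows:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}


def predict(model, features):
    """Return the label whose centroid is closest (squared Euclidean distance)."""
    def distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(features, centroid))
    return min(model, key=lambda label: distance(model[label]))


def accuracy(model, test_rows):
    """Fraction of test rows whose predicted label matches the true label."""
    hits = sum(predict(model, features) == label for features, label in test_rows)
    return hits / len(test_rows)


# Hypothetical toy data: two well-separated groups of points.
data = [([x, x + 1.0], "low") for x in range(0, 10)]
data += [([x, x + 1.0], "high") for x in range(100, 110)]

train, test = train_test_split(data)
model = train_centroids(train)
score = accuracy(model, test)
```

The key habit the visual workflow encodes is the same one shown here: the model is scored only on rows it never saw during training.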

Use Cases for Azure AI

Azure AI powers a variety of real-world applications, including but not limited to:

- Healthcare: Predictive models for patient care, diagnosis assistance, and medical imaging analysis.

- Retail: Personalized product recommendations, customer sentiment analysis, and inventory optimization.

- Banking: Fraud detection, risk management, and customer service chatbots.

- Legal: Document creation, legal briefs, and the ability to analyze a case with or without bias.

- Manufacturing: Predictive maintenance, quality control, and supply chain optimization.

Conclusion

Azure AI and Azure AI Studio are powerful tools in the arsenal of anyone looking to harness the power of artificial intelligence. With its comprehensive suite of services, Azure AI simplifies integrating AI into applications, while Azure AI Studio democratizes machine learning model development with its visual, no-code interface. The future of AI is bright, and platforms like Azure AI make it more accessible than ever.

Azure AI not only brings advanced capabilities to the fingertips of developers and data scientists but also ensures that organizations can maintain control over their AI solutions with robust security, privacy, and compliance practices. As AI continues to evolve, Azure AI and Azure AI Studio will undoubtedly remain at the cutting edge, empowering users to turn their most ambitious AI visions into reality.

Until next time,

Rob





